The Pilot Guide to A/B Testing

A/B testing is crucial for e-commerce businesses to optimise their marketing efforts and drive better results. Read our guide to A/B testing: what it is, why you need it, and how you know you’re doing it right.

A/B testing, also known as split testing, is used in digital marketing to trial and test the most effective version of your online experience for your target audience. By running A/B test experiments, you can compare different options of websites, ads, imagery, audiences, and more, allowing you to discover what works best for your audience and continuously improve your digital strategy to deliver greater returns.

How to Run an A/B Test:

01. Define Clear Objectives

Clearly define the goals you want to achieve through A/B testing. For example, you might aim to increase click-through rates, improve conversion rates, or reduce bounce rates. Clear objectives will guide your testing process and help you accurately measure success.

02. Identify Areas for Improvement

Once you’ve set the objective, the next step is to identify the variable you are going to test. Depending on the area you’re looking to improve, you could be comparing different landing pages, email campaigns, or advertising campaigns. If the focus is on testing creative assets, your variables may include headlines, images, colours, button placements, copy variations, or different layouts. Remember to focus on one variable at a time to clearly understand the impact of what you’re testing.

03. Develop a Hypothesis

Next, come up with a hypothesis that describes what you are comparing and why you think it will bring better results. For example, if your content isn’t generating conversions, you might hypothesise that high-intent converting audiences prefer static content that focuses solely on the product.

Your hypothesis could be something like this: "By using static content that highlights the product, I can increase the conversion rate among this audience."

04. Create & Run an Experiment

Develop two or more versions of your marketing asset that differ only in the variable you're testing, so that any difference in performance can be attributed to that change.

Randomly divide your target audience into two or more groups, with each group exposed to a different version of your marketing asset. You need a sufficiently large sample size to obtain statistically significant results. Many marketing platforms, such as Meta, Klaviyo, Mailchimp, and Google Ads, have built-in A/B testing functionality to make this process easier.
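If you are splitting an audience yourself rather than relying on a platform's built-in tooling, the sketch below shows one common way to do it in Python: hashing each user ID so that assignment is effectively random but stable across visits. The function name, experiment name, and subscriber IDs are hypothetical and used for illustration only.

```python
import hashlib

def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Map a user to a variant, with each variant roughly equally likely."""
    # Hashing the experiment name together with the user ID keeps the
    # assignment stable for a given user and independent between experiments.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]

# Hypothetical subscriber IDs, for illustration only.
subscribers = [f"user-{i}" for i in range(10_000)]
groups = {"A": 0, "B": 0}
for uid in subscribers:
    groups[assign_variant(uid, "sale-email-layout")] += 1

print(groups)  # group sizes should be close to an even split
```

Because the assignment depends only on the user ID and the experiment name, the same person always sees the same version, which keeps the groups clean for the duration of the test.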

Remember to run the test for long enough that your data accounts for fluctuations and changes that may occur over specific time periods.

05. Track and Measure Performance

Use analytics tools to track and measure the performance of each variation. Key metrics to consider include conversion rates, engagement metrics, click-through rates, bounce rates, and revenue generated. Statistical significance testing can help you determine whether the observed differences are likely to be real rather than due to chance, and whether your hypothesis holds true.
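As a rough illustration of what a significance test involves, here is a minimal Python sketch of a two-proportion z-test, one common choice for comparing two conversion or click rates. The test choice, the function name, and the counts are assumptions for illustration and are not taken from any real campaign.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference between two rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the assumption that both variants perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical counts: 200 of 4,000 users converted on A, 250 of 4,000 on B.
z, p = two_proportion_z_test(conv_a=200, n_a=4000, conv_b=250, n_b=4000)
print(f"z = {z:.2f}, p = {p:.4f}")
print("significant at the 5% level" if p < 0.05 else "not significant at the 5% level")
```

A p-value below your chosen threshold (0.05 is a common convention) suggests the difference is unlikely to be down to chance alone.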

The reliability of your analysis depends on the sample size of the experiment. A larger number of user interactions leads to more accurate conclusions about the impact of the variations on your campaign results. Also consider additional factors such as user feedback and qualitative insights to gain a comprehensive understanding.
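To get a feel for how large "large enough" is, the sketch below estimates the number of users needed per group using a standard normal-approximation formula for comparing two proportions. The baseline and target rates, the 5% significance level, and the 80% power setting are illustrative assumptions, and the function name is hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist

def sample_size_per_group(p_base: float, p_variant: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate users needed per group to detect p_base vs p_variant."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    p_bar = (p_base + p_variant) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p_base * (1 - p_base) + p_variant * (1 - p_variant))
    ) ** 2
    return ceil(numerator / (p_variant - p_base) ** 2)

# Hypothetical goal: detect a lift from a 5% to a 6% conversion rate.
print(sample_size_per_group(0.05, 0.06))
```

The smaller the difference you want to detect, the more users each group needs, which is why subtle changes often call for longer tests.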

06. Implement Changes and Iterate

Based on your analysis, implement the changes from the winning variation. Continuously iterate and test new variations to optimise your marketing efforts and provide the best experience for your audience.

Use Case Example

As the email marketing partner for a New Zealand designer womenswear label, we wanted to determine whether we could create more engaging campaigns for Sale emails. We ran an A/B test within the Klaviyo email platform to see how a new layout would perform against the primary layout we had used for most other emails.

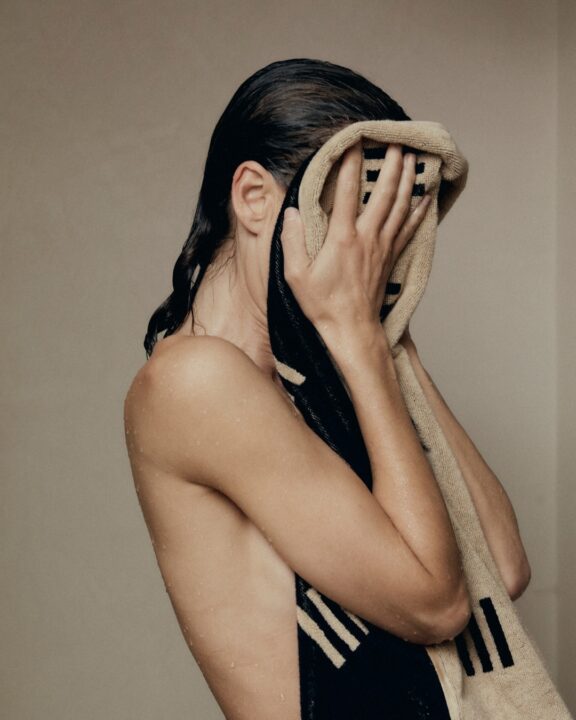

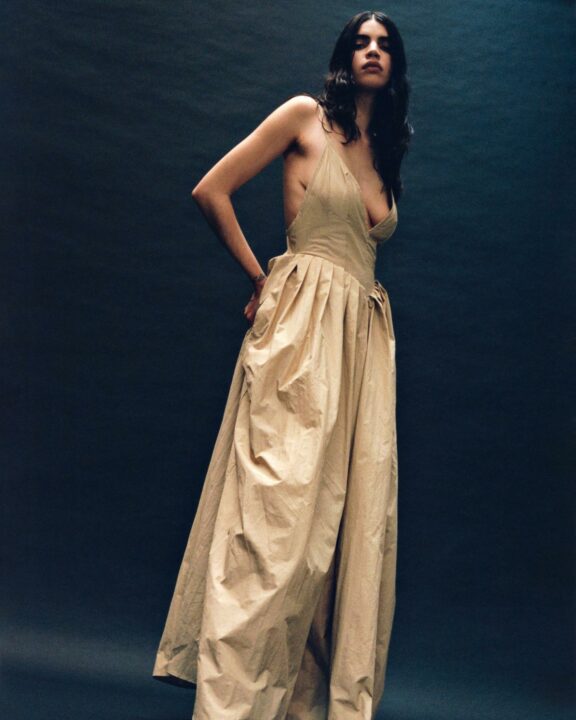

Variant A consisted of a hero image, text block, and button, and featured a selection of five items from the sale, using both campaign imagery and product clear-cuts. Variant B did not showcase any products; instead, it had a single hero GIF flashing the same campaign imagery with sale text overlaid on the image. The copy and buttons were the same in both variants.

To run the test in-platform, Klaviyo sent each of the two emails to a small test group for the first 6 hours. The winner was the variation that received the higher click rate.

The GIF layout (Variant B) was the winning variant, with a click rate of 5.91%, compared to 5.05% for Variant A. This variant was then sent out to the remainder of the database.
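To put the two click rates in context, the short Python sketch below works out the absolute and relative lift. The variable names are ours. Because the sizes of the test groups are not stated here, this calculation says nothing about statistical significance.

```python
# Click rates reported for the two email layouts, in percent.
click_rate_a = 5.05  # Variant A: hero image with product selection
click_rate_b = 5.91  # Variant B: single hero GIF

# Absolute lift is the gap in percentage points.
absolute_lift = click_rate_b - click_rate_a
# Relative lift expresses that gap as a share of Variant A's rate.
relative_lift = absolute_lift / click_rate_a * 100

print(f"Absolute lift: {absolute_lift:.2f} percentage points")
print(f"Relative lift: {relative_lift:.1f}%")
```

In other words, the GIF layout earned 0.86 percentage points more clicks, a relative improvement of roughly 17% over the other layout.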

The results showed us that the flashing GIF layout was an effective way to communicate sales messaging. They also helped confirm our thinking that a much simpler layout focused on key messages, particularly during sale periods, is effective and drives higher click-through.

Key Takeaway

A/B testing enables you to make informed, data-driven decisions to target problem metrics or ensure your digital marketing efforts are as effective as possible. Done correctly, an A/B test can deliver several benefits, including enhanced user engagement, increased conversion rates, reduced bounce rates, and higher click-through rates.

This approach is often seen as low-risk since it reduces expenditure on unsuccessful ideas and ultimately boosts future profits. The true value lies in the insights gained about your audience's preferences, which can be applied across all aspects of your digital strategy to streamline your advertising approach.